@danielwilson

f53c192565fb93b7f43a879ba005b14afa83069ebaa03877786c9c82d49d5937

Apr 24, 2023, 02:31:08

@_akhaliq tweeted

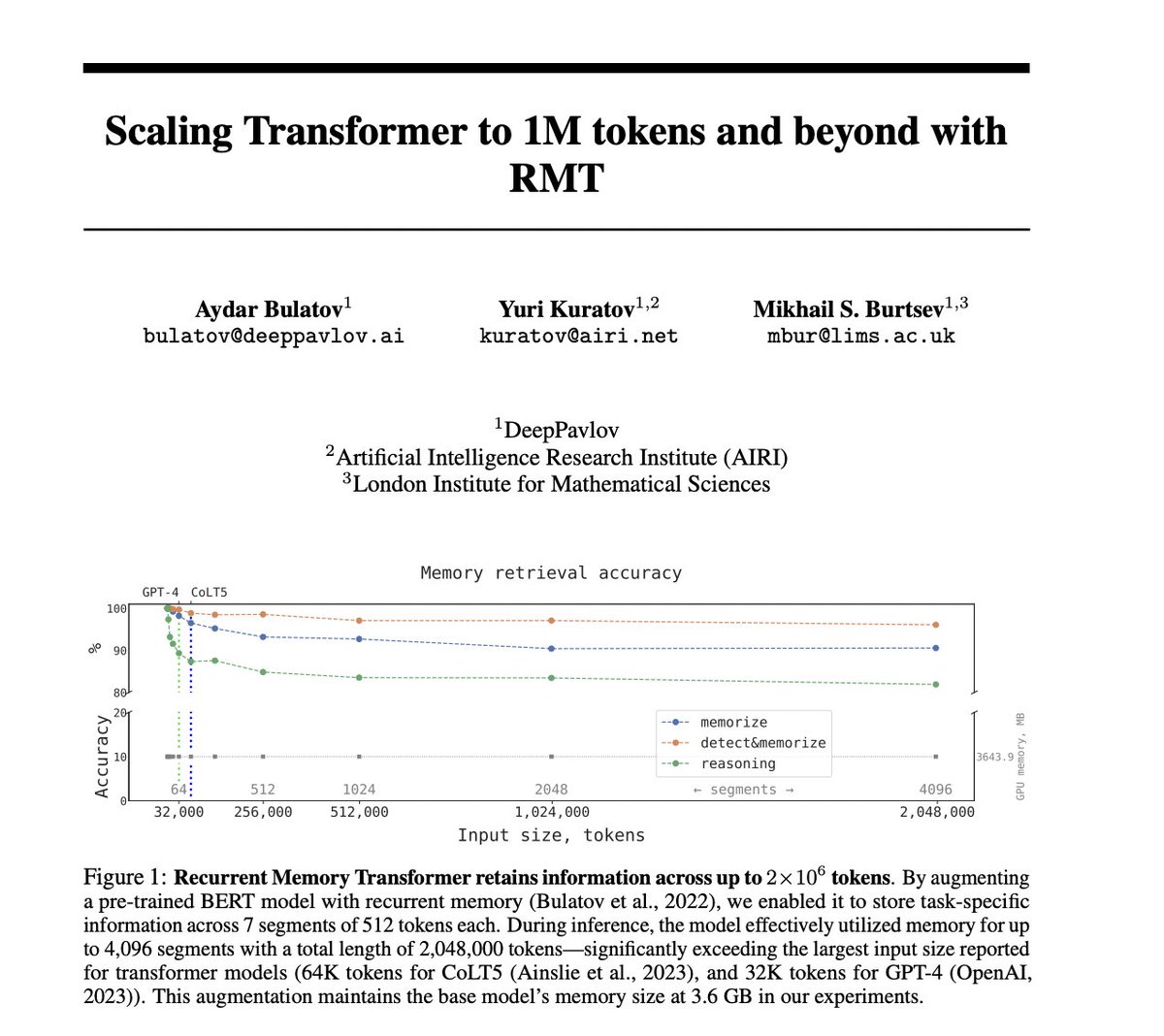

Scaling Transformer to 1M tokens and beyond with RMT

Recurrent Memory Transformer retains information across up to 2 million tokens.

During inference, the model effectively utilized memory for up to 4,096 segments with a total length of 2,048,000 tokens—significantly exceeding… twitter.com/i/web/status/1650308865555148800
